Since the launch of generative AI tools such as ChatGPT and Sora, technology companies have been redefining the way organisations source talent. New so-called agentic AI tools, which allow AI agents to act on behalf of businesses, offer a glimpse of a future in which the clunky practice of tailoring a CV to each job becomes redundant: candidates apply directly to organisations, interview immediately with a human-like AI agent and move through a much faster hiring process.

This AI-driven future promises candidates a better hiring experience while reducing the time and effort organisations spend finding the right candidate. It is a perfect use case for AI: at scale, it can replace processes that are:

- Tedious: small teams reviewing thousands of CVs day in, day out, all year;

- Biased: recruiters looking for key signals and credentials to speed up reviews, rejecting anyone who doesn’t fit the mould; and

- Inefficient: recruiters often spending less than five seconds reviewing a CV.

Automating these processes expands opportunity to people with the right skills, not necessarily the “right” CV.

But this future is a number of years off, so what can we expect in the meantime?

The journey to an automated talent sourcing future

A fully automated, AI-driven talent sourcing process is still some way off, so what are the steps to get there?

The first step, reading and evaluating CVs in high fidelity at scale, was achieved when Large Language Models (LLMs, such as those behind ChatGPT) became widely available to companies around the world. Traditional job description and CV parsing methods captured only limited context and information from a CV; LLMs changed all of that.

For example, in March this year Ubidy released an innovative AI CV Skill Assessment tool built on its own GenAI LLM engine. It improved the way our employers screen candidates’ resumes against the job description, allowing them to instantly identify the most qualified candidates using AI-based skill match scores.
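To make the idea of a skill match score concrete, here is a deliberately simple sketch. Ubidy’s tool uses an LLM engine whose internals are not described here, so this example swaps in a much cruder technique: keyword overlap against a small, hypothetical skill vocabulary (`SKILLS`). The function names are illustrative only. The score is the fraction of the job description’s skills that the CV also mentions.

```python
import re

# Hypothetical, fixed skill vocabulary used only for illustration.
SKILLS = {"python", "sql", "java", "aws", "docker", "kubernetes", "excel"}


def extract_skills(text: str) -> set[str]:
    """Return the known skills mentioned in a piece of text (case-insensitive)."""
    # Lower-case and split into word-like tokens so "Python," matches "python".
    tokens = set(re.findall(r"[a-z0-9+#]+", text.lower()))
    return SKILLS & tokens


def skill_match_score(job_description: str, cv_text: str) -> float:
    """Fraction of the skills required by the job that also appear in the CV."""
    required = extract_skills(job_description)
    if not required:
        return 0.0  # the job description names no known skills
    found = extract_skills(cv_text)
    return len(required & found) / len(required)


jd = "We need a data engineer with Python, SQL and AWS experience."
cv = "Five years building ETL pipelines in Python and SQL."
print(f"{skill_match_score(jd, cv):.2f}")  # 0.67 (2 of 3 required skills)
```

Because this score only counts words in text, a skills bar chart embedded as an image would contribute nothing to it. An LLM-based assessor can also recognise synonyms and context that exact keyword matching misses.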

This engine works by reading the text of both the job description and the resume. Because it processes only one type of data, text, it is what the AI field calls a ‘unimodal’ model. The obvious question is: what about other data types, such as the visual elements many candidates now use in their resumes to better represent their skills, competencies and experience?

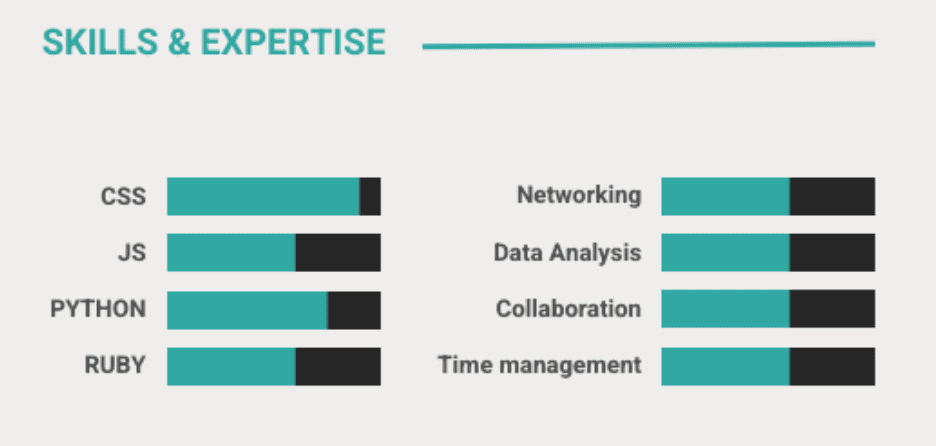

Visual CVs like the example above are becoming common, and we need ways to deal with them.

Insights from visual elements

The current Ubidy AI CV Assessment tool processes only text, so it misses valuable insights from the visual elements often included in CVs. These visual components, such as charts illustrating project timelines, infographics summarising skills or images of certificates, give a clearer picture of a candidate’s abilities and achievements. The bar chart in the example above, highlighting proficiency in various programming languages, is not uncommon and conveys more at a glance than a plain text description.

Extracting such information is important, as it complements textual data, offering a more well-rounded view of a candidate’s competencies and qualifications. This fuller picture enhances the recruitment process by ensuring that no essential details are overlooked. It also allows candidates to be more creative in the information they provide to automated tools by sending links to things like YouTube or Vimeo videos, further enhancing their application (something critical for many creative industries).

In fact, the CV parsing tools in today’s major applicant tracking systems simply ignore visual information; picking up only text is the norm. This led to a well-known trick: candidates would write the job description’s requirements in white font on a white page, so the human reviewer would miss them but the CV parser would pick them up and recommend the candidate, to the confusion of the recruiter. It is not yet clear when the major applicant tracking tools will fix this important deficit.
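The sketch below shows why this trick works on text-only parsers. It assumes a hypothetical `TextSpan` structure in which each run of CV text has already been extracted along with its font colour; real CVs arrive as PDF or Word files, and reading colours requires a library for that format. A naive parser keeps every span, while a colour-aware one drops text that matches the page background.

```python
from dataclasses import dataclass


@dataclass
class TextSpan:
    """Hypothetical run of CV text with its font colour as an RGB hex string."""
    text: str
    colour: str  # e.g. "#000000" for black


def naive_parse(spans: list[TextSpan]) -> str:
    """Text-only parsing: keep every span, whatever its colour."""
    return " ".join(s.text for s in spans)


def visible_parse(spans: list[TextSpan], background: str = "#ffffff") -> str:
    """Drop spans whose font colour equals the page background (invisible to a human)."""
    return " ".join(s.text for s in spans if s.colour.lower() != background.lower())


cv = [
    TextSpan("Sales manager, 6 years", "#000000"),
    TextSpan("Python SQL Kubernetes machine learning", "#FFFFFF"),  # hidden keywords
]
print(naive_parse(cv))    # includes the hidden keywords
print(visible_parse(cv))  # Sales manager, 6 years
```

Exact colour matching is a simplification: near-white text such as `#FEFEFE` would slip through, so a more robust check would compare colour contrast against the background rather than test for equality.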

This next step on the journey towards agentic AI hiring has now been achieved with generally accessible multimodal generative AI tools.

Multimodal Generative AI

A multimodal generative AI tool integrates diverse data types (such as text, images, audio and video), enabling it to perform complex tasks across different input formats. By combining these modalities, it improves comprehension, context awareness and accuracy, making it versatile for applications in fields such as content generation, healthcare, education and recruitment.

With the release of Meta’s Llama 3.2 90B Vision model (text and image input), organisations worldwide can now process text and images together. The model adds image reasoning capabilities, supporting tasks such as image-text retrieval, visual reasoning and document visual question answering.

It also keeps the 128K-token context length introduced in Llama 3.1 (so documents no longer need to be split up for analysis) and officially supports eight languages for text-only tasks: English, German, French, Italian, Portuguese, Hindi, Spanish and Thai. Candidates can therefore submit their CVs in many languages and have them processed automatically.
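A rough back-of-the-envelope check shows why a 128K-token window removes the need to split CVs. The sketch assumes a common rule of thumb of about four characters per English token (the real count depends on the model’s tokenizer and the language) and treats 128K as 128,000 tokens. `estimate_tokens` and `fits_in_context` are hypothetical helpers, not part of any Llama API.

```python
# Assumed heuristic: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4
# "128K" context, treated here as 128,000 tokens (an assumption).
CONTEXT_TOKENS = 128_000


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(*documents: str, reserve_for_output: int = 2_000) -> bool:
    """True if all documents, plus room for the model's answer, fit in one prompt."""
    total = sum(estimate_tokens(d) for d in documents)
    return total + reserve_for_output <= CONTEXT_TOKENS


cv_text = "x" * 20_000  # stand-in for a long CV (about 5,000 tokens)
jd_text = "y" * 8_000   # stand-in for a job description (about 2,000 tokens)
print(estimate_tokens(cv_text), estimate_tokens(jd_text))  # 5000 2000
print(fits_in_context(cv_text, jd_text))  # True
```

Even a long CV and job description together use well under a tenth of the window; a production pipeline would count tokens with the model’s own tokenizer rather than this heuristic.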

This provides us with another step on our journey, so when will we see it?

The first step to the future: Ubidy’s multimodal AI CV assessment

Ubidy has begun incorporating tools like Llama 3.2 into its CV assessment pipeline, advancing the way employers assess candidates’ skills, experience and potential. Our new AI tool will integrate visual elements, enabling candidates to showcase themselves in more dynamic, personalised ways, and we are already planning to accept audio and video content from candidates in future. This advancement opens up richer, deeper assessments by drawing on a broader range of data. For instance, candidates can highlight their achievements through video introductions, visual infographics or multimedia portfolios rather than relying only on written descriptions. We expect to see this over the next six months.

As a result, employers will gain access to a fuller, more authentic representation of a candidate’s competencies, problem-solving abilities and personality traits. In doing so, Ubidy’s multimodal AI tool will provide businesses with more comprehensive insights, leading to more informed hiring decisions. Employers will also benefit from faster decision-making, as the tool can quickly analyse diverse data formats, saving time when reviewing complex profiles. It will also enhance diversity and inclusion by allowing candidates with different communication styles to shine.

The next steps to the future

Ubidy is not the only company working to move the entire talent sourcing process towards a future run by AI agents. Other organisations, such as Fairgo.AI, are tackling the real-time, AI-enabled interview directly, with tools that automate technical interviews in real time and tailor the process to drill into each candidate’s most relevant capabilities for the role at hand.

With these advances, we believe a three-year timeline to a fully automated, agentic AI hiring process is not unreasonable. Ubidy and its global collaborators are working to make this exciting future a reality.

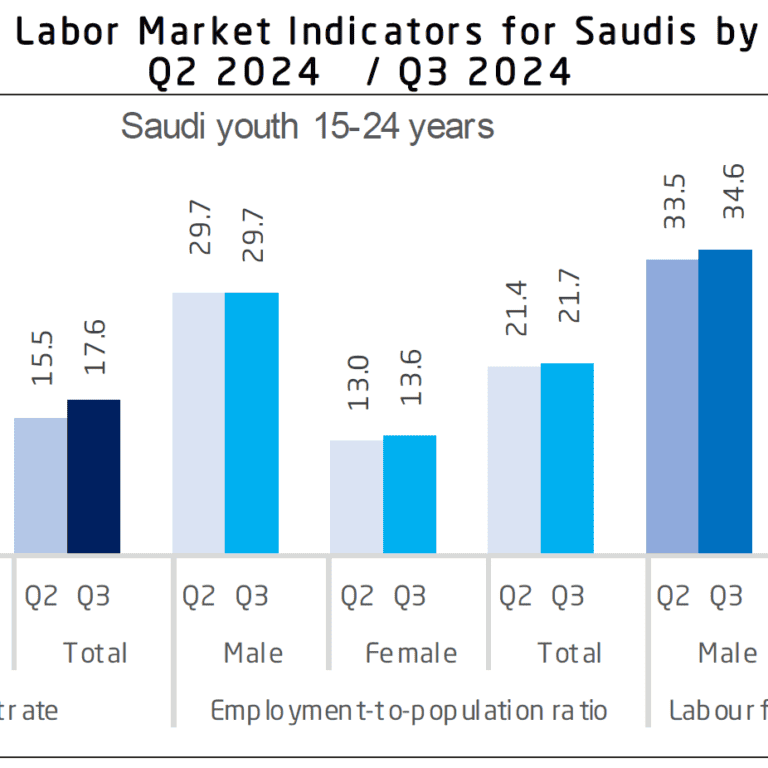
